This is bad.

Racist Cars

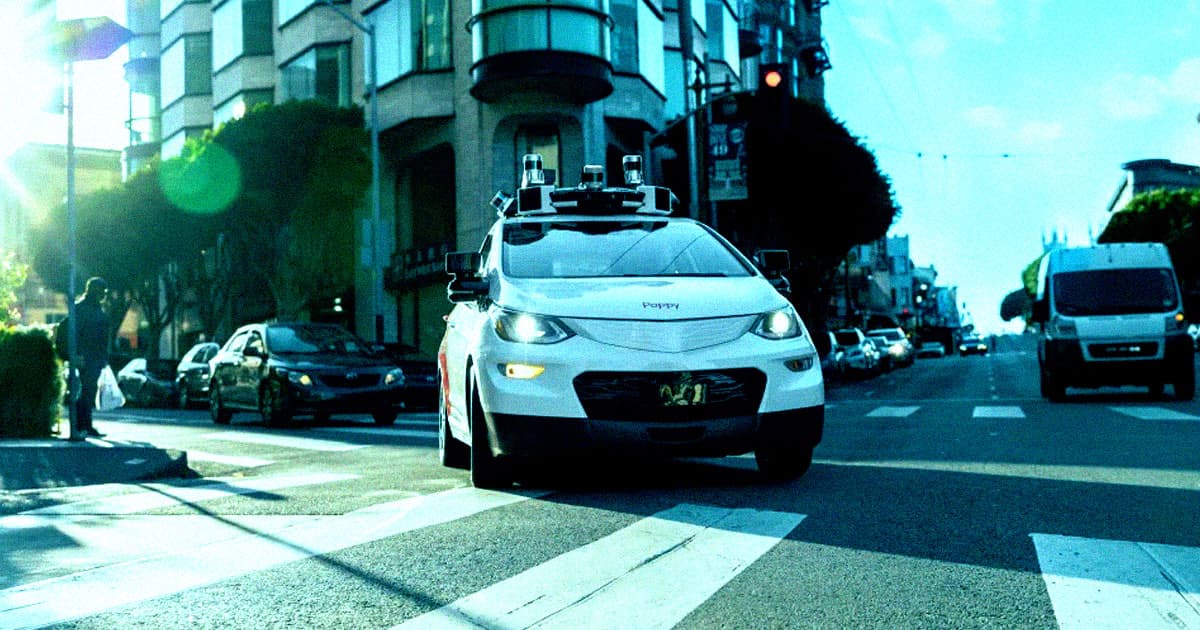

Driverless cars have been stealing headlines by causing traffic jams, getting stuck in cement, and getting pulled over by very confused police. But according to a recent study, these basic technological failures hardly scratch the surface of more insidious problems that may lurk within autonomous vehicles' underlying tech.

According to the not-yet-peer-reviewed study, conducted by researchers at King's College London, a fairness review of eight different AI-powered pedestrian detectors trained on "widely-used, real-world" datasets revealed the programs to be much worse at detecting darker-skinned pedestrians than lighter-skinned ones, with the systems failing to identify darker-complexioned individuals nearly eight percent more often than their lighter-skinned counterparts.

That's a staggering figure, and it speaks to the real and potentially deadly dangers of biased AI systems.

Ominous Implications

According to the study, the researchers first carried out extensive data annotation, labeling a total of 8,111 images with "16,070 gender labels, 20,115 age labels, and 3,513 skin tone labels."

From there, it was just a statistics game, with the researchers ultimately finding a 7.52 percent disparity in detection accuracy between light- and dark-skinned folks. And according to the research, the danger for darker-skinned folks went up significantly in "low-contrast" or "low-brightness" settings or, in other words, at night.

But that wasn't all. In addition to this racial bias, the detectors shared another concerning blind spot: children, who, the results showed, were twenty percent less likely than adults to be identified by the detectors.

It's important to note that none of the systems tested actually belonged to driverless car companies, since that information generally falls under the "proprietary information" umbrella. But as Jie Zhang, a King's College London lecturer in computer science and a co-author of the study, told New Scientist, those firms' models probably aren't that far off. Considering that driverless vehicles are starting to win major regulatory victories, that's deeply unsettling.

"It's their confidential information and they won't allow other people to know what models they use, but what we know is that they're usually built upon the existing open-source models," said Zhang. "We can be certain that their models must also have similar issues."

It's no secret that machine bias is a serious issue, and the implications are only getting clearer as advanced AI tech becomes increasingly integrated into everyday life. But with lives literally on the line, these kinds of biases aren't something we can afford to leave for regulation to address only in the aftermath of preventable tragedies.
