An official New York City chatbot designed to advise local business owners and landlords on city laws appears to have little to no understanding of said city laws, according to a report from The Markup — and is actually in some cases encouraging landlords and small businesses to break the law.

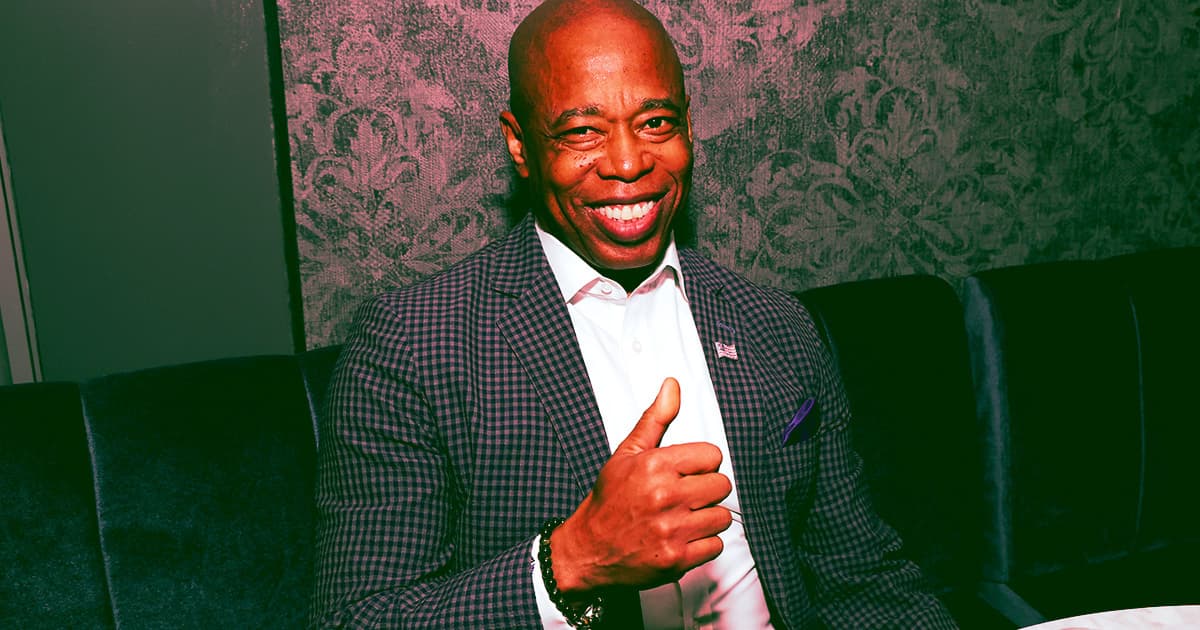

Unveiled last October, the MyCity chatbot was introduced as part of Mayor Eric Adams' "New York City Artificial Intelligence Plan," described in a press release as a "comprehensive" government effort to "cement" the city's "position as a leader in the responsible use of innovative artificial intelligence (AI) technology." The AI bot, which is powered by Microsoft technology, was central to this rollout, and city officials lauded the bot's ability to help small business and property owners more effectively navigate complicated legal questions.

"I look forward to seeing how the MyCity AI chatbot unlocks the potential of small-business owners across our city," the city's First Deputy Mayor Sheena Wright said in a statement at the time, "allowing them to focus on what they do best — growing their businesses — while government does its part in making it easier to 'Get Stuff Done.'"

But as it turns out, the MyCity bot appears to be offering absolutely terrible advice.

When the Markup journalists put the AI — which notes on its website that it draws information "published by the NYC Department of Small Business Services" — through its paces, they discovered the service was consistently spitting out backward legal advice that confidently encouraged users to break the law.

As the Markup notes in its report, the bot fumbled several questions regarding housing and business law, for example telling the journalists that "buildings are not required to accept Section 8 vouchers." This is resoundingly false. In New York City, it's illegal for landlords to discriminate against tenants based on their source of income, and rejecting a Section 8 voucher would qualify as exactly that kind of discrimination.

In another instance, when asked if it's legal for an employer to take a chunk of employee tips, the bot responded with a confident "yes, you can take a cut of your worker's tips." Once again, though, this is incorrect. As the Markup explains, bosses in some situations might be able to put an employee's tips towards minimum wage requirements, but that's vastly different from a boss being able to pull a percentage from a tip pile at whim.

People on social media quickly put the bot to the test and found more questions to which it provides egregiously wrong advice.

"My employee violated our policy by telling a coworker about a second set of books I keep for our boss," asked one user, in a prompt Futurism verified with the bot. "Can I fire him?"

"Yes, you can fire your employee for violating your policy by disclosing a second set of books to a coworker," it replied. "As an employer, you have the right to enforce your company policies and take appropriate action when an employee violates them. Disclosing a second set of books to a coworker can be considered a breach of trust and confidentiality, which may warrant termination."

A spokesperson for the NYC Office of Technology and Innovation reportedly defended the AI, telling the Markup that while it's still in its pilot phase, the MyCity chatbot "has already provided thousands of people with timely, accurate answers" about businesses. They also noted that the chatbot features adequate risk disclaimers, though it's unclear how effective any AI risk disclaimer is at dissuading users from taking information at face value.

"We will continue to focus on upgrading this tool," the spokesperson told the Markup, "so that we can better support small businesses across the city."

Sure! Anyway. While the questions this bot was created to answer might indeed be difficult to navigate, please don't use this tool to do it. It's dumb, you could do something illegal, and someone (say, a potential tenant you rejected based on their income situation, or perhaps an employee who's suddenly seeing their boss snag a percentage of their tip earnings) could face damages as a result. Cheers to the inevitably looming "the government's AI told me it was fine to break the law" court filing.
